Cloud-native group chat with multiple AI Agents in .NET - Part 1: Setting up

In April 2025, the .NET AI Template entered its second preview.

I thought this would be a great opportunity to try out the idea of a group chat with multiple AI agents.

Let’s start by bootstrapping the template exactly as shipped.

After installing the template with `dotnet new install Microsoft.Extensions.AI.Templates`, we walk through the wizard and create a project called MultiAgentChatPoC. From the command line, the same configuration looks like this:

dotnet new aichatweb --output MultiAgentChatPoC --provider githubmodels --vector-store local --aspire true

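Notice that we are enabling .NET Aspire orchestration (`--aspire true`). This adds an AppHost project that starts and wires together the app’s services, which is what lets our project run “cloud-native”. Using Aspire is good practice here, because it also gives us service defaults such as health checks and OpenTelemetry, plus a dashboard for logs and traces.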

Now we create a new repo and push our code.

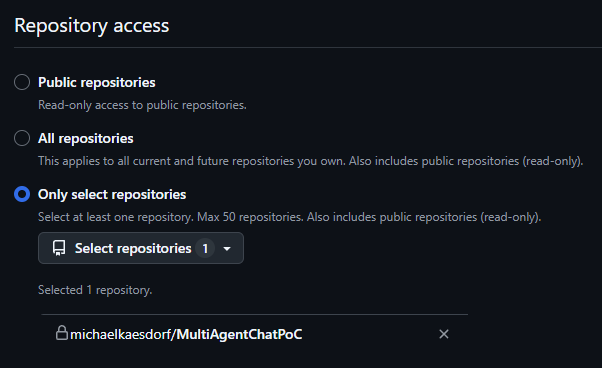

We will be using GitHub Models along the way. For this, we first need to create a personal access token (PAT) on GitHub. After signing in, we go to Settings -> Developer settings -> Personal access tokens -> Fine-grained tokens. Here we limit the scope of the PAT to the repository we just created:

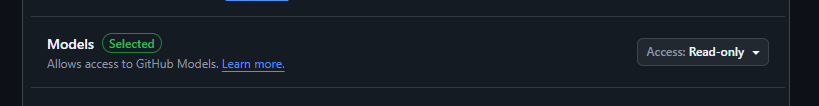

Further down, we grant read-only access to “Models”.

Next, we add the PAT to the user secrets of the AppHost project, replacing YOUR-API-KEY with the token:

cd MultiAgentChatPoC.AppHost

dotnet user-secrets set ConnectionStrings:openai "Endpoint=https://models.inference.ai.azure.com;Key=YOUR-API-KEY"
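If you would rather not type the token directly into the command, the connection string can be assembled from a variable instead. The following is a minimal sketch, assuming a Bash-compatible shell; the function name `github_models_connection_string` and the `GITHUB_PAT` variable are hypothetical and not part of the template.

```shell
# Hypothetical helper (not part of the template): builds the value stored under
# ConnectionStrings:openai, in the Endpoint=...;Key=... form used above.
github_models_connection_string() {
  local token="$1"
  # Refuse to build a connection string without a token.
  if [ -z "$token" ]; then
    echo "error: no GitHub PAT supplied" >&2
    return 1
  fi
  printf 'Endpoint=https://models.inference.ai.azure.com;Key=%s' "$token"
}
```

With the token in `GITHUB_PAT`, the secret could then be set with `dotnet user-secrets set ConnectionStrings:openai "$(github_models_connection_string "$GITHUB_PAT")"`.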

OK, let’s hit Start! (From the command line, running `dotnet run` in the AppHost directory does the same.)

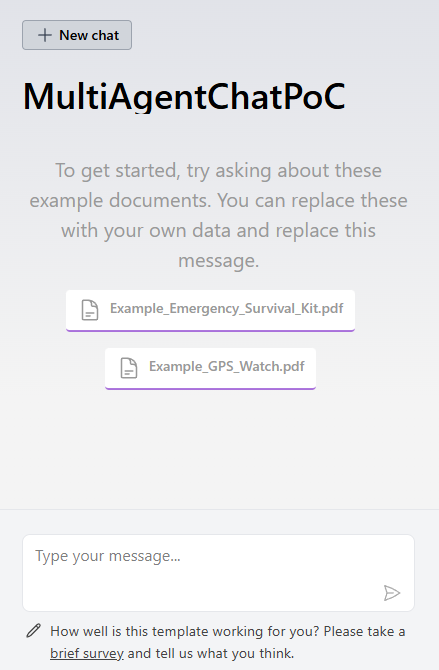

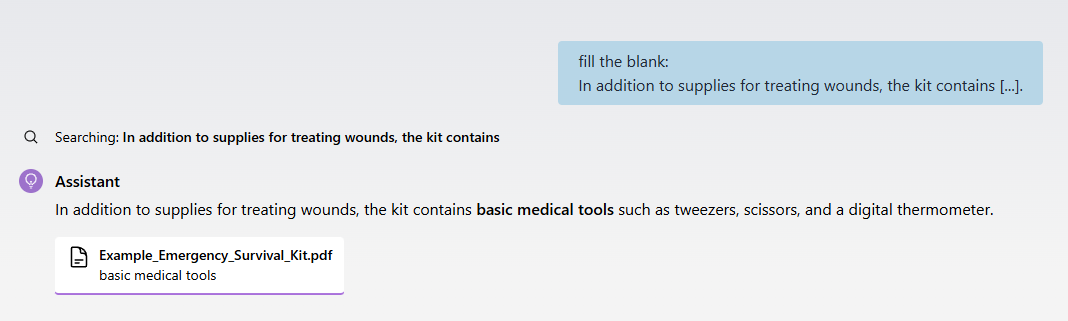

Great: the app starts, and we are presented with a chat interface. Let’s test it by typing a message, and sure enough, we get a response back. It works.

Alright, we are set up and ready to go, so this is where part one ends. It feels good to have something working. In the next post, we will take a small step back and think about the architecture.